Game on: inspiring vocational training at Galaxus

by Daniel Steiner

In software development, we make decisions based on data, and A/B tests help us do this. Because we were not satisfied with our external testing tool, we quickly built our own.

For a good three months now, digitec and Galaxus customers have been able to shop in a climate-neutral way. This is made possible by climate compensation in the check-out. But not all customers were able to offset right from the start. We carried out an A/B test in the first two weeks. Some of the shoppers saw the familiar A variant without the CO2 compensation option in the check-out. The other group saw the new B variant with the option to offset their CO2 emissions. We wanted to find out how our customers responded to the CO2 compensation feature.
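How shoppers end up in one group or the other is not described here. A common approach, shown below as a minimal sketch and not as our actual implementation, is to hash a stable user ID together with the experiment name, so that the same shopper always sees the same variant. The function name `assign_variant`, the experiment name and the 50/50 split are assumptions made for illustration.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, share_b: float = 0.5) -> str:
    """Deterministically assign a user to variant "A" or "B".

    Hypothetical helper for illustration only; share_b is the fraction of
    users that should see variant B.
    """
    # Hash the experiment name together with the user ID so that the same
    # user can land in different groups in different experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "B" if bucket < share_b else "A"


if __name__ == "__main__":
    # Hypothetical experiment name; the same user always gets the same answer.
    print(assign_variant("customer-123", "co2-compensation"))
```

Because the assignment depends only on the user ID and the experiment name, no per-user state has to be stored to keep the experience consistent across visits.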

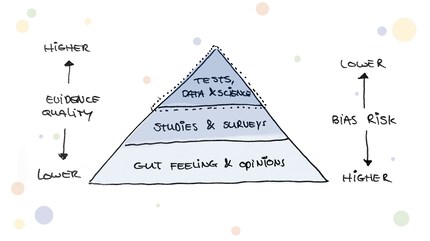

Such A/B tests are now an established tool in software development for measuring the effectiveness of new functionality. According to Google, just 30% of all A/B tests are positive. In other words, without testing there is a 70% chance of shipping a change that brings no improvement or even has a negative impact.

Even if the basic principle of A/B testing is quickly understood, the subject harbours a great deal of complexity: it starts with a solid test hypothesis, continues through correct set-up and tracking, and ends with evaluation and the formulation of follow-up hypotheses. For this reason, there are countless testing tools on the market that simplify certain aspects of A/B testing for software teams or even take them off their hands completely. Until recently, we at Digitec Galaxus also used an external testing tool. As our engineering crew gained testing experience and knowledge, we reached a point where the development teams ran into various problems with that tool. This is where things got stuck:

We also didn't want to compromise on our culture of experimentation: we want to control how the variants in our A/B tests are served ourselves, and we want tests to run as fast as possible for our users.

Within a short space of time, a compact group had come together to tackle the problem. It was a colourful mix of software engineers, an analyst, UX researchers and product owners, all highly motivated to work on the topic.

The first question we asked ourselves was what options we had:

Together with two external A/B testing experts, we got to the bottom of the performance problems and carried out a small audit. We quickly realised that it wouldn't be rocket science to build our own tool tailored to our requirements. It would also give us full transparency and control over the tool and allow us to develop it further as our needs change.

The development of the new tool then began. The developers did not drop everything for it, though; they invested a few minutes every now and then alongside their primary work. And lo and behold, within three weeks the first prototype was ready for its first test. We then ran several A/A tests, in which both groups see the same variant, to validate the plausibility of the data and the test results. Once we were able to put a green tick behind the validation, we started the first test with end customers, a total of six weeks after development began. We were all eager to see the initial results and whether the new tool would hold up. To our relief, there were no nasty surprises and the first test went through without a hitch!
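To illustrate what such a plausibility check can look like: in an A/A test, both groups get the same experience, so a sound tool should usually report no significant difference between them. The following is a minimal sketch using a two-sided two-proportion z-test. It is one common way to evaluate such a test, not necessarily the method used in our tool, and the function name and all figures are invented for illustration.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    Illustrative helper: conv_* are conversion counts, n_* are group sizes.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both groups behave the same.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        # No variation at all (e.g. zero conversions): nothing to distinguish.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Invented A/A figures: both groups saw the identical check-out.
    z, p = two_proportion_z_test(conv_a=512, n_a=10_000, conv_b=498, n_b=10_000)
    verdict = "plausible" if p >= 0.05 else "investigate"
    print(f"z = {z:.2f}, p = {p:.3f} -> {verdict}")
```

A single A/A test can still come out "significant" by chance (about 5% of the time at a 0.05 threshold), which is one reason to run several of them before trusting a new tool.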

Five months have passed since then and we have already run dozens of tests using our tool. There are still challenges. But because we have full control over our tool, we can react quickly and develop it according to the needs of our teams. Over time, the colourful and motivated engineering crew has developed into a well-established group that continues to drive the topic of A/B testing forward within the company.

Would you also like to solve problems in an uncomplicated way? Then take a look at our vacancies.

Fast learning through small but valuable steps is the key to successful products.