AI converts speech into portraits - MIT researchers achieve amazing feat

In 2019, researchers at the Massachusetts Institute of Technology (MIT) unveiled an algorithm that uses artificial intelligence (AI) to create a matching face from a voice recording. Its results are still surprising today.

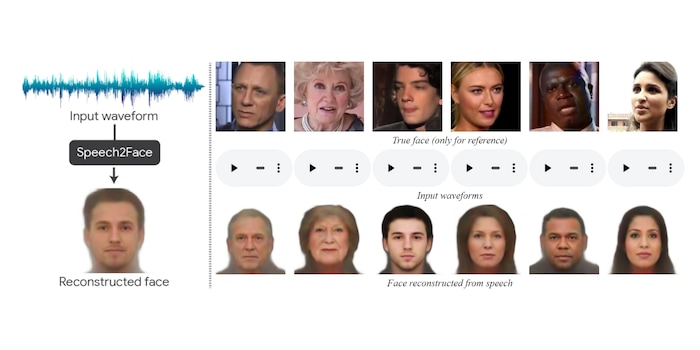

The results are not yet perfect, but in some cases they already come very close to the original face. The algorithm, called Speech2Face, was written by AI scientists at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) and trained on YouTube videos. It converts three-second speech recordings into portraits, based on set parameters and what it has learned during training.

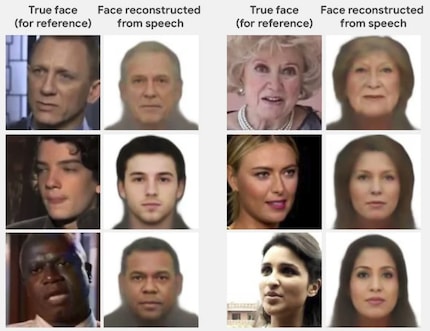

Many of the results bear a strong resemblance to the human behind the voice.

How Speech2Face works

The researchers achieved this by "feeding" the artificial neural network millions of YouTube videos of people speaking in front of the camera. The AI was tasked with finding sound features that could be associated with certain facial features and other characteristics. Without external help, the AI learned to infer age, gender, ethnicity and more, and to generate portraits from that information.
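The training idea can be illustrated with a toy sketch. It is not the researchers' implementation: the article does not describe the network, so this example assumes a plain linear model, made-up three-number "voice features" and two-number "face features", and hypothetical names such as `train_voice_to_face` and `TRUE_MAP`. What it shows is the principle that every video supplies a matching voice/face pair, so the model can learn the association without hand-written labels.

```python
import random

# Toy illustration only: a linear map stands in for the neural network,
# and the "voice" and "face" feature vectors are synthetic (assumptions).

def predict(W, voice):
    """Apply the map W to one voice feature vector."""
    return [sum(w * v for w, v in zip(row, voice)) for row in W]

def train_voice_to_face(pairs, dim_voice, dim_face, lr=0.05, epochs=200):
    """Fit W by stochastic gradient descent on the squared error."""
    W = [[0.0] * dim_voice for _ in range(dim_face)]
    for _ in range(epochs):
        for voice, face in pairs:
            pred = predict(W, voice)
            for i in range(dim_face):
                err = pred[i] - face[i]
                for j in range(dim_voice):
                    W[i][j] -= lr * err * voice[j]
    return W

# Hypothetical hidden relationship between voice and face features.
TRUE_MAP = [[0.8, -0.3, 0.1],
            [0.2, 0.5, -0.7]]

rng = random.Random(0)
# Each "video" yields one (voice, face) pair from the same speaker.
voices = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(50)]
pairs = [(v, predict(TRUE_MAP, v)) for v in voices]

W = train_voice_to_face(pairs, dim_voice=3, dim_face=2)

new_voice = [0.2, -0.4, 0.6]
print(predict(W, new_voice))         # learned estimate for an unseen voice
print(predict(TRUE_MAP, new_voice))  # value the toy data was generated from
```

In this noise-free toy setting the two printed vectors end up very close to each other. Real voices and faces are far less tidy, which is one reason the actual results are only approximate.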

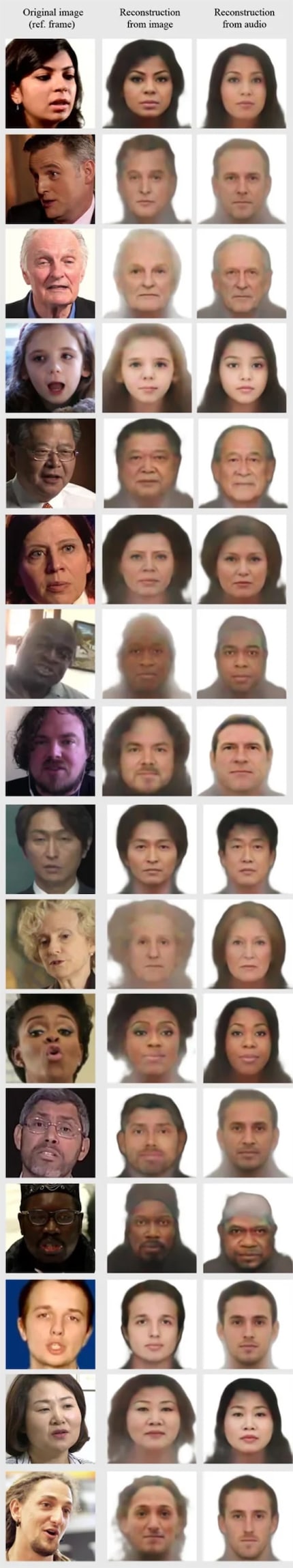

The researchers also developed a face decoder that reconstructs a frontal portrait from a still YouTube image, regardless of the lighting and pose of the person shown. These reconstructions serve as a reference for verifying the frontal portraits created by Speech2Face, and the comparison yields impressive results.

On the far left you see the YouTube still image. In the middle is the reference portrait generated from that still for verification, and on the right is the image generated purely from a short voice recording.
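One simple way to put a number on such a comparison is to describe each portrait as a feature vector and measure how closely two vectors point in the same direction. The sketch below assumes cosine similarity and invented four-number feature vectors. The article does not say which measure the researchers used, so treat the metric, the values and the function name `cosine_similarity` as illustrative only.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)

# Invented feature vectors (assumptions, not data from the study).
from_still_image = [0.9, 0.1, 0.4, 0.7]   # reference built from the video still
from_voice = [0.8, 0.2, 0.5, 0.6]         # portrait generated from the voice alone
unrelated_face = [0.1, 0.9, 0.8, 0.05]    # a different person

match = cosine_similarity(from_still_image, from_voice)
mismatch = cosine_similarity(from_still_image, unrelated_face)
print(f"same speaker: {match:.2f}, different speaker: {mismatch:.2f}")
```

A value near 1 means the two portraits have very similar features; a clearly lower value suggests they show different-looking people.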

By the way, longer speech recordings lead to a better result. The researchers demonstrate this with the following examples, which were created from three- and six-second audio snippets.

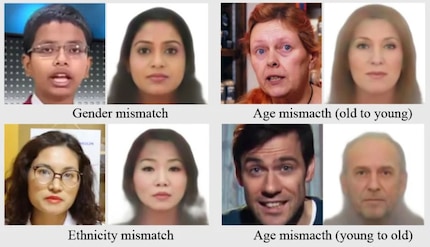

The AI still has problems with high-pitched male voices, which are often interpreted as female. In addition, Asian men who speak American English receive portraits that resemble white men. If the same person speaks in their native language, the correct ethnicity is assigned.

The researchers hope to get even more accurate results by providing more training data that is more representative of the entire global population. They are aware that AI currently struggles with racial bias and are trying to address this shortcoming.

What about privacy?

Even though the project has scientific aims, it raises questions about misuse. In response, the researchers say their system is not capable of revealing a person's true identity based on their voice. They say the AI is trained to detect features common to many people based on voice input. This results in average-looking faces with typical visual characteristics.
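Why a system trained this way drifts toward generic faces can be shown with a small numerical sketch. It assumes, purely for illustration, that the model is penalized by squared error and that several speakers have nearly identical voice features; the article states neither. Under those assumptions the best single guess is the average of their faces, which matches no individual exactly.

```python
def squared_error(guess, faces):
    """Total squared distance between one guess and every face vector."""
    return sum(sum((g - f) ** 2 for g, f in zip(guess, face)) for face in faces)

def mean_face(faces):
    """Component-wise average of the face vectors."""
    n = len(faces)
    return [sum(column) / n for column in zip(*faces)]

# Invented two-number "face features" for three speakers with similar voices.
faces = [[0.2, 0.9], [0.7, 0.6], [0.9, 0.0]]

average = mean_face(faces)
print("average face:", average)
for face in faces:
    # Using any real face as the guess costs more than using the average.
    print(face, squared_error(face, faces) > squared_error(average, faces))
```

Each line of the loop prints `True` for this data: the averaged face is the cheapest guess, yet it is not the face of any of the three speakers.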

Only time will tell whether it stays that way. If you want to hear the original voice recording behind a generated image, you can find examples here.

